Introduction

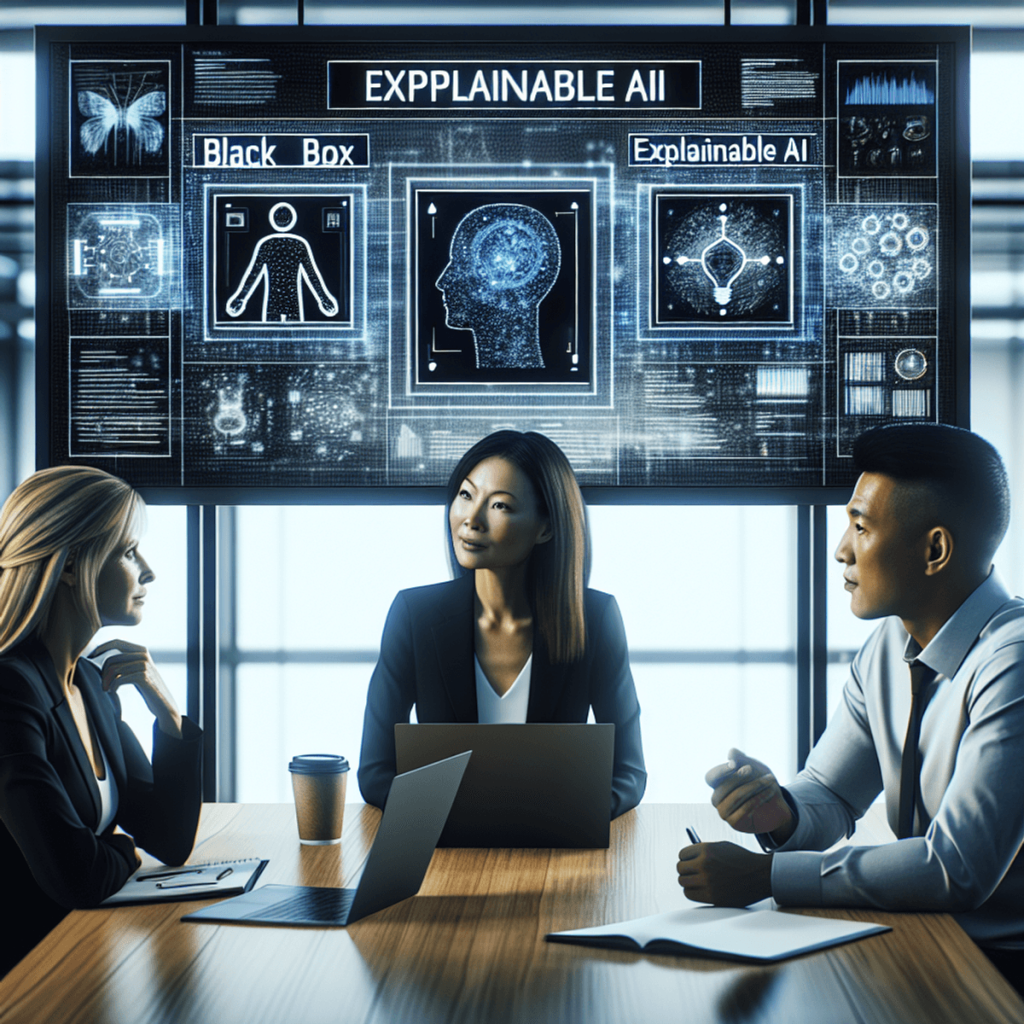

In the rapidly evolving world of artificial intelligence (AI), understanding the difference between black box and explainable AI (XAI) models is crucial. Black box models, while powerful, operate in ways that are often opaque and difficult to interpret. In contrast, XAI emphasizes transparency and aims to provide clear explanations for a model’s predictions.

For industries like real estate and proptech, grasping these distinctions is vital. The choice between black box and explainable models can significantly impact accuracy, trust, and ethical considerations in AI applications.

In this article, you will learn:

- The defining features of black box models and their common use cases.

- The concept of comprehension debt and its implications.

- Risks associated with black box models, including biases and ethical concerns.

- How XAI techniques enhance AI transparency and trust.

- Practical steps for integrating XAI into organizational workflows.

- How Hello Here SL leverages both approaches to revolutionize property matching.

Understanding Black Box Models

Definition and Characteristics

Black-box models refer to AI systems, particularly those using deep learning algorithms, where the internal workings are not transparent to users. These models can make highly accurate predictions, but their decision-making process is not easily interpretable. For example, a neural network used in image recognition can identify objects with high precision but doesn’t provide insight into how it reaches its conclusions.

Common Use Cases

These models are used in various industries:

- Real Estate: Predictive analytics for property valuations and market trends.

- Finance: Risk assessment and fraud detection.

- Healthcare: Diagnostic tools for identifying diseases from medical images.

- Marketing: Customer segmentation and personalized recommendations.

Advantages of Deep Learning Techniques

Improved Accuracy and Scalability

- Black-box models excel in tasks requiring high accuracy due to their ability to learn complex patterns from large datasets.

- They offer scalability, making them suitable for applications involving massive amounts of data.

Example: Real Estate Market Analysis

In real estate, black-box models can analyze vast amounts of historical transaction data to predict future property values. This capability helps investors make informed decisions and enhances market efficiency.

Challenges Associated with Black Box Models

Despite their advantages, black-box models come with significant challenges.

Hidden Biases

Hidden biases within the training data can lead to unfair or discriminatory outcomes.

Accountability Issues

Lack of transparency poses accountability issues. When a model’s prediction is questioned, it is challenging to understand or explain the rationale behind the decision.

Example: Ethical Concerns in Real Estate

Imagine a property valuation model that consistently undervalues homes in certain neighborhoods due to biased training data. Such biases could perpetuate socio-economic disparities and lead to ethical concerns.

Understanding these characteristics and challenges is essential as we explore alternatives like Explainable AI (XAI) that aim to address these limitations while retaining high performance.

Understanding Comprehension Debt

Comprehension debt refers to the gap in understanding created by the use of black box models, where the inner workings of the model are opaque. This lack of interpretability and transparency can hinder decision-making processes.

In industries like real estate, decisions driven by AI models need clarity to ensure fair and accurate outcomes. When stakeholders can’t comprehend how a model arrives at its predictions, trust diminishes. For instance, property valuations generated by a black box model might be questioned if the reasoning behind the prices remains unclear.

The implications are significant:

- Trust Issues: Clients and users may be skeptical about adopting AI-driven recommendations.

- Regulatory Concerns: Industries must meet compliance standards that often require transparency in decision-making.

- Bias Identification: Without understanding the model’s logic, identifying and correcting biases becomes challenging.

Addressing comprehension debt is crucial for fostering trust and ensuring responsible AI deployment.

Risks and Concerns with Black Box Models

Black box models can lead to biased outcomes, raising significant ethical concerns. When bias in the training data or model design carries through into a model’s predictions, it can result in discrimination and unfair practices. For instance, a property recommendation system might favor certain demographics over others, creating inequalities in the real estate market.

Addressing these issues is crucial for maintaining model fairness. Diverse training datasets help mitigate biases, and regular audits of AI systems help verify that they operate equitably and transparently. By implementing these practices, organizations can reduce the risks associated with black box models and promote ethical AI usage.

Key steps to address model bias:

- Use diverse training data: Collect data from various sources to create a representative sample.

- Perform regular audits: Continuously evaluate AI systems to detect and correct biases.
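
One way to make the audit step concrete is to compare a model's valuation error across groups. The sketch below is illustrative only: the record fields (`neighborhood`, `predicted`, `actual`), the sample figures, and the 5% tolerance are all assumptions chosen for the example, not values from any real platform or regulation.

```python
from collections import defaultdict
from statistics import mean


def mean_relative_error_by_group(records, group_key="neighborhood"):
    """Return the mean signed relative valuation error per group.

    A negative value means the model undervalues homes in that group
    on average; a positive value means it overvalues them.
    """
    errors = defaultdict(list)
    for row in records:
        relative_error = (row["predicted"] - row["actual"]) / row["actual"]
        errors[row[group_key]].append(relative_error)
    return {group: mean(values) for group, values in errors.items()}


def flag_biased_groups(records, tolerance=0.05, group_key="neighborhood"):
    """List groups whose mean relative error exceeds the tolerance."""
    by_group = mean_relative_error_by_group(records, group_key)
    return sorted(g for g, err in by_group.items() if abs(err) > tolerance)


# Hypothetical audit sample: predicted vs. actual sale prices.
sample = [
    {"neighborhood": "north", "predicted": 200_000, "actual": 200_000},
    {"neighborhood": "north", "predicted": 310_000, "actual": 300_000},
    {"neighborhood": "south", "predicted": 170_000, "actual": 200_000},
    {"neighborhood": "south", "predicted": 135_000, "actual": 150_000},
]

print(mean_relative_error_by_group(sample))
print(flag_biased_groups(sample))
```

A check like this only surfaces a disparity. Deciding whether it reflects unfair bias, and how to correct it, still requires domain experts and a closer look at the training data.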

Model fairness and transparency are not just ethical imperatives but also business necessities. By proactively addressing biases, companies can build trust and reliability in their AI-driven applications.

Exploring Explainable AI (XAI) Techniques

Explainable AI (XAI) techniques are designed to enhance transparency and interpretability in machine learning systems. XAI aims to demystify the decision-making process of AI models, enabling users to understand why an AI system made a specific recommendation or decision. By adopting XAI techniques, organizations can not only identify and rectify biases but also provide explanations to affected individuals, promoting accountability and trust.

Collaboration and Ethical Guidelines

Addressing model bias requires collaboration between stakeholders including data scientists, domain experts, and ethicists. Together, they can develop robust ethical guidelines that ensure fairness in AI systems. These guidelines should encompass ethical considerations from data collection to model deployment, reflecting the organization’s commitment to responsible AI practices.

Ongoing Monitoring and Adaptation

Finally, it is crucial to recognize that addressing model bias is an ongoing process. As societal norms evolve and new biases emerge, continuous monitoring and adaptation of AI systems become imperative. Regular audits, user feedback mechanisms, and open channels of communication with affected communities can help organizations stay vigilant and responsive to changing needs.

Conclusion

Model bias poses significant challenges in the development and deployment of AI systems. However, by embracing diversity in training data, implementing XAI techniques, fostering collaboration, and maintaining vigilance through ongoing monitoring, organizations can mitigate biases and build fairer and more trustworthy AI solutions, enabling users to understand, trust, and effectively manage these systems. This is particularly crucial in industries like real estate, where AI-driven listings can significantly impact decisions.

The Role of Explainable AI

Explainable Artificial Intelligence provides clarity on how an AI model arrives at specific conclusions. It addresses one of the main criticisms of black box models: their opacity. By making the decision-making process transparent, XAI fosters greater trust among users and stakeholders. This transparency is key for ensuring ethical practices and accountability in AI applications.

Techniques in Explainable AI

Various techniques are employed in XAI to elucidate model predictions:

- LIME (Local Interpretable Model-agnostic Explanations): LIME explains individual predictions by approximating the black box model locally with an interpretable model. It works by perturbing the input data, observing changes in predictions, and then fitting a simpler model, weighted toward the perturbed samples closest to the original input, that mimics the behavior of the complex one within that locality.

  - Example: In a property matching app like Hello Here, LIME can help explain why a specific property was recommended by showing which features (e.g., location, price) were most influential for that recommendation.

- SHAP (SHapley Additive exPlanations): SHAP values provide a unified measure of feature importance based on Shapley values from cooperative game theory. Each feature’s contribution to a prediction is its average marginal contribution across all possible combinations of the other features. Because the number of combinations grows exponentially with the number of features, practical implementations typically approximate these values.

  - Example: For a real estate platform using SHAP, users can see detailed explanations for property valuations or recommendations, understanding how each feature (like square footage or neighborhood safety) contributed to the final decision.
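
To illustrate the idea behind SHAP, the following minimal sketch computes exact Shapley values by brute force for a tiny model. It does not use the `shap` library. The `toy_valuation` function, its feature names, and all prices are hypothetical, and treating an absent feature as simply contributing nothing is a simplifying assumption.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, features):
    """Compute exact Shapley values by enumerating every coalition.

    value_fn takes a frozenset of feature names and returns the model
    output when only those features are "present".
    """
    n = len(features)
    result = {}
    for feature in features:
        others = [f for f in features if f != feature]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                marginal = value_fn(coalition | {feature}) - value_fn(coalition)
                total += weight * marginal
        result[feature] = total
    return result


# Hypothetical toy valuation model (all numbers are made up).
BASE_PRICE = 100_000


def toy_valuation(present):
    price = BASE_PRICE
    if "square_footage" in present:
        price += 60_000
    if "safe_neighborhood" in present:
        price += 30_000
    # Interaction: a large home in a safe area earns an extra premium.
    if {"square_footage", "safe_neighborhood"} <= present:
        price += 20_000
    if "garden" in present:
        price += 10_000
    return price


features = ["square_footage", "safe_neighborhood", "garden"]
contributions = shapley_values(toy_valuation, features)
print(contributions)
```

In this toy case the interaction premium is split evenly between the two features that create it, and the contributions add up to the difference between the full valuation and the base price. The enumeration visits every subset of features, which is why it is only practical for a handful of them.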

Benefits of XAI

Incorporating XAI techniques into real estate platforms like Hello Here not only enhances user experience but also promotes responsible use of AI technologies across the industry.

Benefits of XAI include:

- Transparency: Clear insights into AI decisions enhance user trust.

- Accountability: Easier identification and correction of biases or errors.

- User Empowerment: Users gain better control over automated systems.

Comparing Black Box Models vs. Explainable AI (XAI) Strategies

Transparency and Interpretability

Black box models and explainable AI (XAI) differ significantly in terms of transparency and interpretability. Black box models, such as deep neural networks, are complex and often lack transparency, making it difficult to understand how they arrive at specific decisions. This opacity can be a drawback in industries where understanding the decision-making process is crucial.

In contrast, XAI techniques aim to make AI systems more transparent and interpretable. By providing clear explanations for predictions, XAI enhances trust and accountability. Techniques like LIME and SHAP break down complex model outputs into understandable insights, enabling stakeholders to comprehend the underlying factors influencing decisions.
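
A LIME-style explanation can be sketched in a few lines: sample points near one input, query the opaque model, and fit a proximity-weighted linear model to the results. The code below is a simplified illustration of that idea rather than the `lime` library's implementation. The `black_box` function, its two features (size and distance), and the sampling and kernel parameters are assumptions made for the example.

```python
import math
import random


def black_box(x):
    """Stand-in for an opaque model (hypothetical, nonlinear)."""
    size, distance = x
    return 1000 * size - 50 * distance ** 2


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def lime_style_explanation(predict, instance, n_samples=500, scale=0.5,
                           kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance.

    Returns [intercept, coef_1, ..., coef_d], where each coefficient
    applies to the offset of that feature from the instance.
    """
    rng = random.Random(seed)
    d = len(instance)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        offset = [rng.gauss(0, scale) for _ in range(d)]
        sample = [v + o for v, o in zip(instance, offset)]
        y = predict(sample)
        # Samples closer to the instance get a larger weight.
        dist_sq = sum(o * o for o in offset)
        weight = math.exp(-dist_sq / kernel_width ** 2)
        row = [1.0] + offset
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    return solve_linear_system(xtwx, xtwy)


coefs = lime_style_explanation(black_box, [80.0, 3.0])
print(coefs)
```

The fitted coefficients approximate how the prediction changes when each feature moves slightly away from the explained input, so they describe the model only in that neighborhood, not globally.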

Trade-Offs

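- Accuracy vs. Interpretability: Black box models often achieve higher accuracy due to their complexity, but this comes at the cost of interpretability. Inherently interpretable models, such as linear regression or small decision trees, may give up some accuracy in exchange for understandable results, whereas post-hoc techniques like LIME and SHAP explain an existing model without changing its accuracy.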

- Scalability vs. Transparency: Black box models are highly scalable and can handle vast datasets efficiently. However, the lack of transparency may pose risks in sensitive applications. XAI methods prioritize transparency but might require additional computational resources.

- Speed vs. Accountability: In real-time applications, black box models offer quicker predictions but lack the accountability that XAI provides through detailed explanations.

Understanding these trade-offs between black box and explainable approaches is essential when choosing an AI strategy that aligns with organizational goals and ethical considerations.

Implementing Effective Explainable Artificial Intelligence Strategies

Integrating explainable AI (XAI) into an organization’s workflow is crucial for enhancing transparency and trust. Start by adopting a phased approach that ensures a smooth transition from black box models to XAI.

Steps to Implement Explainable Artificial Intelligence:

- Begin with Simpler Models:

  - Use linear regression or decision trees to create a foundation.

  - These models are inherently more interpretable, providing immediate benefits of transparency.

- Train and Validate:

  - Ensure diverse training datasets to mitigate biases.

  - Regularly validate model performance and explanations with domain experts.

- Adopt Model-Agnostic Techniques:

  - Implement methods like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations).

  - These techniques offer insights into any model’s behavior without altering its architecture.

- Develop a Feedback Loop:

  - Create mechanisms for continuous feedback from stakeholders.

  - Adapt models based on user insights to improve both accuracy and interpretability.

- Educate and Train Teams:

  - Provide comprehensive training for teams on the importance of explainability.

  - Equip them with tools and knowledge to leverage XAI effectively in their workflows.

- Document Everything:

  - Maintain detailed documentation of model decisions, data sources, and validation processes.

  - This transparency builds trust and allows for easier audits.

By following these steps, organizations can seamlessly integrate XAI, balancing the need for advanced analytics with the imperative of interpretability.
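
The first step above, starting with an inherently interpretable model, can be sketched as a one-feature linear regression whose prediction breaks down into a baseline plus a per-feature contribution. The data points and the choice of floor area as the only feature are hypothetical, and the function names are illustrative rather than taken from any library.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for a single feature: y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def explain_prediction(slope, intercept, area):
    """Break a prediction into a baseline and the feature's contribution."""
    return {
        "baseline": intercept,
        "area_contribution": slope * area,
        "prediction": intercept + slope * area,
    }


# Hypothetical training data: floor area in square meters vs. sale price.
areas = [50, 70, 90, 110]
prices = [150_000, 200_000, 250_000, 300_000]

slope, intercept = fit_simple_linear_regression(areas, prices)
print(explain_prediction(slope, intercept, 100))
```

Because the whole model is two numbers, every prediction can be explained by reading them directly, which is the transparency benefit this step relies on. More complex models need post-hoc techniques to produce a comparable breakdown.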

The Future of Artificial Intelligence-Powered Property Search with Hello Here SL

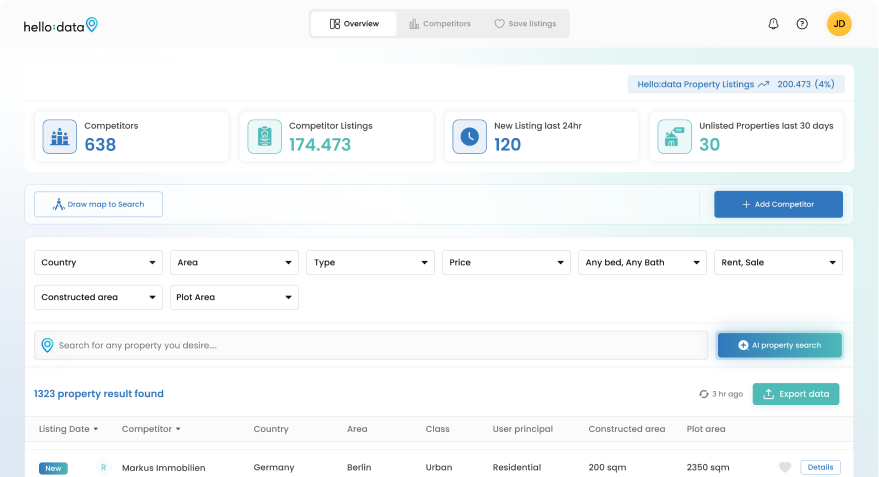

Hello Here SL is leading the way in real estate by using both black box and explainable AI (XAI) strategies. This combination improves their property matching platform, changing how users find homes.

Key Innovations:

- Black Box Models: Using deep learning techniques to analyze large amounts of real estate data. These models offer unmatched accuracy and scalability, allowing the platform to handle extensive datasets seamlessly.

- Explainable AI (XAI): Incorporating XAI methods like LIME and SHAP to provide transparency and trust. Users can understand the reasons behind property matches, building greater confidence in the AI’s recommendations.

Potential Impact on Global Real Estate Market

Hello Here SL’s approach aims to transform property search by:

- Efficiency: Making property search easier by matching users with properties much as dating apps match people. This simplifies the user experience, making it intuitive and engaging.

- Data Richness: Outperforming competitors such as Casafari and Idealista by gathering four times more listings, giving users a wider choice of properties. This data richness is a key factor in distinguishing Hello Here SL from other platforms.

- Global Reach: Targeting worldwide markets with a focus on innovation, setting new standards for AI-driven real estate platforms.

By combining the power of black box models with the transparency of XAI, Hello Here SL is poised to redefine property matching, offering unmatched efficiency and trust in the global real estate market. This change is part of a larger trend where Artificial Intelligence in Real Estate is transforming every aspect of buying, selling, and managing properties. Furthermore, the strategic process of market segmentation made possible by AI is enabling real estate professionals to better understand and cater to their clients’ needs and preferences.

Conclusion: Embracing Responsible Artificial Intelligence Development Across Industries

Businesses must prioritize transparency in their use of AI technologies without giving up predictive performance. Tools like those offered by Hello Here SL demonstrate the value of integrating both black box models and explainable AI (XAI) strategies to achieve this balance.

- Future trends in explainable AI point towards increasing adoption across various sectors.

- Emphasizing transparency and accountability ensures ethical practices and fosters trust.

As we navigate the differences between black box and explainable AI (XAI) models, it’s evident that responsible AI development is not just a necessity but a competitive advantage. The potential trajectory for ethical AI extends beyond real estate, impacting numerous industries globally.